Anti-bot systems protect websites from harmful automated interactions, such as spam and DDoS attacks. However, not all automated activity is harmful: bots are often essential for security testing, building search indexes, and gathering public data.

Anti-bot systems gather extensive data about each visitor to spot non-human patterns. If anything about a visitor's behavior, network, or device setup seems unusual, the visitor may be blocked or shown a CAPTCHA to confirm they're human. Anti-bot detection usually works across three levels:

- Network Level: Anti-bot systems analyze the visitor's IP address, checking whether it's associated with spam, data centers, or the Tor network. They also inspect packet headers. IP addresses on blacklists or with high spam scores often trigger CAPTCHAs. For instance, using a free VPN can sometimes lead to CAPTCHA challenges on Google.
- Browser Fingerprint Level: These systems collect details about the visitor's browser and device to build a digital fingerprint. This fingerprint can include browser type, version, language settings, screen resolution, window size, hardware configuration, system fonts, and more.
- Behavioral Level: Advanced anti-bot systems analyze user behavior, such as mouse movements and scrolling patterns, and compare it with regular visitor activity.

There are many anti-bot systems, and the specifics of each vary greatly and change over time. Popular solutions include:

- Akamai
- Cloudflare
- DataDome
- Incapsula
- Kasada
- PerimeterX

Knowing which anti-bot system a website uses can help you find the best way to bypass it. You can find helpful tips and methods for dealing with specific anti-bot systems on forums and Discord channels like The Web Scraping Club.

To see which anti-bot protection a site uses, you can use tools like the Wappalyzer browser extension. Wappalyzer shows the technologies a website runs on, including anti-bot systems, making it easier to plan how to scrape the site effectively.
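As a rough illustration of how a tool might guess a site's anti-bot vendor, the sketch below matches response header and cookie names against a small table of markers. The marker names are commonly reported ones and should be treated as assumptions: vendors can change them at any time, Kasada is left out because its markers are less clear-cut, and the function name `guess_antibot_vendors` is hypothetical. This is not how Wappalyzer itself works internally.

```python
# Commonly reported markers (assumptions; vendors may rename or remove them).
COOKIE_PREFIXES = {
    "__cf_bm": "Cloudflare",
    "_abck": "Akamai",
    "datadome": "DataDome",
    "visid_incap_": "Incapsula",
    "incap_ses_": "Incapsula",
    "_px": "PerimeterX",
}
HEADER_NAMES = {"cf-ray": "Cloudflare"}


def guess_antibot_vendors(headers, cookie_names):
    """Return a sorted list of vendors hinted at by header and cookie names."""
    found = set()
    lowered_headers = {name.lower() for name in headers}
    for header, vendor in HEADER_NAMES.items():
        if header in lowered_headers:
            found.add(vendor)
    for cookie in cookie_names:
        for prefix, vendor in COOKIE_PREFIXES.items():
            if cookie.lower().startswith(prefix):
                found.add(vendor)
    return sorted(found)


if __name__ == "__main__":
    # Header and cookie names as they might be collected from one response.
    print(guess_antibot_vendors({"CF-RAY": "8a1b"}, ["__cf_bm", "session"]))
```

A match is only a hint: a missing marker does not prove the site is unprotected, and a short prefix such as `_px` can produce false positives.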
To bypass anti-bot systems, you must mask your actions at every detection level. Here are some practical ways to do it:

- Build a Custom Solution: Create your own tools and manage the infrastructure yourself. This gives you complete control but requires technical skills.
- Use Paid Services: Platforms like Apify, ScrapingBee, Browserless, or Surfsky provide ready-to-go scraping solutions that avoid detection.
- Combine Tools: Use a mix of high-quality proxies, CAPTCHA solvers, and anti-detect browsers to reduce the chances of being flagged as a bot.
- Headless Browsers with Anti-Detection Patches: Run standard browsers in headless mode with anti-detection tweaks. This option is versatile and often works for simpler scraping tasks.
- Explore Other Solutions: There are many ways to bypass anti-bot systems, from simple setups to complex multi-layered approaches. Choose the one that fits your task's complexity and budget.

To keep a bot undetected at the network level, use high-quality proxies. You might be able to use your own IP address for smaller tasks, but this won't work for large-scale data collection. In these cases, reliable residential or mobile proxies are essential. Good proxies reduce the risk of blocks and help you send thousands of requests consistently without being flagged. Avoid cheap, low-quality proxies that may be blacklisted, as they can quickly reveal bot activity.

When choosing proxies for scraping, keep these critical points in mind:

- Check Spam Databases: Verify that the proxy's IP address isn't flagged in spam databases using tools like PixelScan or Firehol (iplists.firehol.org). This helps ensure the IPs don't look suspicious.
- Avoid DNS Leaks: Run a DNS leak test to ensure the proxy doesn't reveal your real DNS servers. Only the proxy's IP should appear in the list of detected servers.
- Use Reliable Proxy Types: Proxies from ISPs look more legitimate and are less likely to raise red flags than datacenter proxies.
- Consider Rotating Proxies: These proxies provide access to a pool of IPs, automatically changing the IP with each request or at regular intervals. This reduces the risk of being blocked by making it harder for websites to detect patterns in your bot's activity.

These steps will help ensure your proxies are well-suited for large-scale data collection without drawing unwanted attention.

Rotating proxies are especially helpful when a bot needs to send a high volume of requests, as they spread the requests across many IPs rather than overloading a single one.

Multi-accounting (anti-detect) browsers are ideal for spoofing browser fingerprints, and top-quality ones like Octo Browser take this a step further by spoofing at the browser's core level. They allow you to create many browser profiles, each appearing as a unique user.

With an anti-detect browser, you can scrape data flexibly using automation libraries or frameworks. You can set up multiple profiles with the fingerprint settings, proxies, and cookies you need without opening the browser itself. These profiles are ready for use in automated or manual mode.

Using a multi-accounting browser isn't much different from working with a standard browser in headless mode. Octo Browser even offers detailed documentation with API connection guides for popular programming languages, making the setup easy to follow.

Professional anti-detect browsers make it easy to manage multiple profiles, connect proxies, and, through advanced digital fingerprint spoofing, access data that standard scraping tools can't reach.

To bypass anti-bot systems effectively, simulating actual user actions is essential.
This includes adding delays, moving the cursor naturally, typing with a human-like rhythm, taking random pauses, and showing irregular behavior. Everyday actions to simulate include logging in, clicking "Read more," navigating links, filling in forms, and scrolling through content.

You can simulate these actions with popular open-source automation tools like Selenium, or with others such as MechanicalSoup and Nightmare JS. Adding delays of random length between requests helps make scraping look more natural.

Anti-bot systems analyze network, browser, and behavioral data to block bots. Effective bypassing requires masking at each of these levels:

- Network Level: Use high-quality proxies, ideally rotating ones.
- Browser Fingerprint: Use anti-detect browsers like Octo Browser.
- Behavior Simulation: Rely on browser automation tools like Selenium, adding irregular delays and behavior patterns to mimic human users.

Together, these strategies create a robust framework for more secure and efficient web scraping.

How Anti-Bot Systems Detect Bots
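To make the three detection levels more concrete, here is a toy sketch of how signals from each level might be combined into an allow, CAPTCHA, or block decision. Everything in it is hypothetical: the `VisitorSignals` fields, the weights, and the thresholds are invented for illustration, and real anti-bot systems use far more signals and proprietary models.

```python
from dataclasses import dataclass


@dataclass
class VisitorSignals:
    # Network level
    ip_on_blocklist: bool
    ip_is_datacenter: bool
    # Browser fingerprint level (e.g. user agent agrees with reported platform)
    fingerprint_consistent: bool
    # Behavioral level
    mouse_events: int
    avg_seconds_between_requests: float


def decide(signals):
    """Return 'allow', 'captcha', or 'block' using made-up weights."""
    score = 0
    if signals.ip_on_blocklist:
        score += 3
    if signals.ip_is_datacenter:
        score += 2
    if not signals.fingerprint_consistent:
        score += 2
    if signals.mouse_events == 0:
        score += 1
    if signals.avg_seconds_between_requests < 0.5:
        score += 2
    # Thresholds are arbitrary and chosen only for this illustration.
    if score >= 5:
        return "block"
    if score >= 2:
        return "captcha"
    return "allow"


if __name__ == "__main__":
    visitor = VisitorSignals(False, True, True, 12, 3.0)
    print(decide(visitor))
```

The point of the sketch is that a single weak signal may only trigger a CAPTCHA, while several signals across levels add up to a block, which is why masking has to cover every level.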

How to Bypass Anti-Bot Systems?

Network-Level Masking
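The advice to check proxy IPs against spam databases can be sketched as a local lookup against a downloaded blocklist. The sketch assumes a plain-text list with one IP address or CIDR range per line and `#` comments, which is the general shape of the lists published at iplists.firehol.org. The function names and sample addresses (taken from documentation-only ranges) are hypothetical.

```python
import ipaddress


def parse_blocklist(lines):
    """Parse lines holding an IP or CIDR range each; skip comments and blanks."""
    networks = []
    for line in lines:
        entry = line.split("#", 1)[0].strip()
        if entry:
            networks.append(ipaddress.ip_network(entry, strict=False))
    return networks


def is_listed(ip, networks):
    """Return True if the address falls inside any blocklisted network."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


if __name__ == "__main__":
    sample = ["# example blocklist", "203.0.113.0/24", "198.51.100.7"]
    networks = parse_blocklist(sample)
    for proxy_ip in ["203.0.113.45", "192.0.2.1"]:
        print(proxy_ip, "listed" if is_listed(proxy_ip, networks) else "clean")
```

Running a check like this before adding a proxy to your pool filters out addresses that are likely to trigger CAPTCHAs from the first request.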

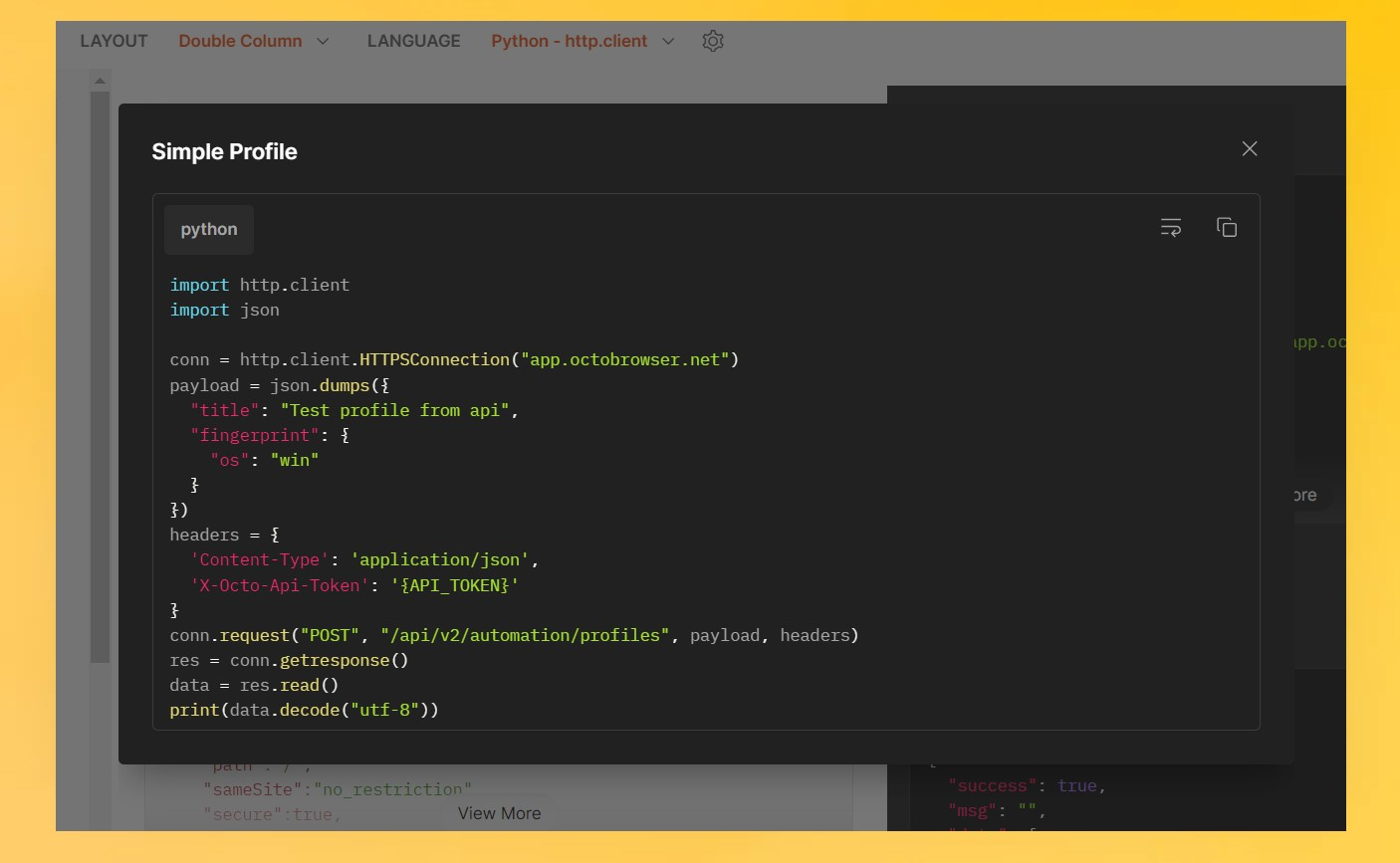
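Rotating proxies, as described earlier, switch the outgoing IP per request or at intervals. Commercial rotating proxies usually handle this on the provider's side; if you manage your own pool, a minimal round-robin sketch might look like the following. The `ProxyRotator` class and the proxy URLs are hypothetical placeholders.

```python
class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxy_urls):
        self._proxies = list(proxy_urls)
        if not self._proxies:
            raise ValueError("at least one proxy is required")
        self._index = 0

    def next_proxy(self):
        """Return the next proxy URL, wrapping around at the end of the pool."""
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def as_requests_proxies(self):
        """Return the next proxy as an http/https mapping for an HTTP client."""
        url = self.next_proxy()
        return {"http": url, "https": url}


if __name__ == "__main__":
    # Placeholder addresses; replace them with proxies from your provider.
    rotator = ProxyRotator([
        "http://user:pass@proxy1.example.com:8000",
        "http://user:pass@proxy2.example.com:8000",
    ])
    for _ in range(3):
        print(rotator.next_proxy())
```

The mapping returned by `as_requests_proxies` has the shape that the `requests` library accepts in its `proxies` argument, so each call can route a request through a different IP.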

Fingerprint-Level Masking
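The idea of many profiles that each look like a distinct user can be sketched as plain data: one profile per proxy, each with its own settings, plus a digest that stands in for the fingerprint an anti-bot system might compute. This is not Octo Browser's API. `BrowserProfile`, `build_profiles`, and `fingerprint_id` are hypothetical names, and a real anti-detect browser spoofs many more attributes inside the browser engine itself.

```python
import hashlib
import json
import random
from dataclasses import asdict, dataclass

# Illustrative value pools; real profiles draw on far more attributes.
LOCALES = [("en-US", "America/New_York"), ("de-DE", "Europe/Berlin"), ("fr-FR", "Europe/Paris")]
SCREENS = [(1920, 1080), (1366, 768), (1536, 864)]


@dataclass(frozen=True)
class BrowserProfile:
    user_agent: str
    language: str
    timezone: str
    screen: tuple
    proxy: str


def fingerprint_id(profile):
    """Hash the profile's attributes into a short, stable identifier."""
    payload = json.dumps(asdict(profile), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


def build_profiles(proxies, user_agent, seed=None):
    """Create one profile per proxy, keeping language and timezone paired."""
    rng = random.Random(seed)
    profiles = []
    for proxy in proxies:
        language, timezone = rng.choice(LOCALES)
        profiles.append(
            BrowserProfile(user_agent, language, timezone, rng.choice(SCREENS), proxy)
        )
    return profiles


if __name__ == "__main__":
    proxies = ["http://proxy1.example.com:8000", "http://proxy2.example.com:8000"]
    for profile in build_profiles(proxies, "Mozilla/5.0 (placeholder)", seed=7):
        print(fingerprint_id(profile), profile.language, profile.screen)
```

Picking language and timezone as a pair reflects a general point: a profile's attributes should agree with each other, because mismatched settings are themselves an unusual signal.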

Simulating Real User Actions
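The random delays, typing rhythm, and natural cursor movement discussed earlier can be sketched with the standard library alone. The helpers below only generate timings and coordinates; the function names and all numeric defaults are assumptions chosen for illustration, not values known to defeat any particular anti-bot system.

```python
import random


def human_delay(rng, base=1.0, jitter=0.5, long_pause_chance=0.1):
    """Return a pause in seconds: base plus or minus jitter, sometimes much longer."""
    delay = base + rng.uniform(-jitter, jitter)
    if rng.random() < long_pause_chance:
        delay += rng.uniform(2.0, 5.0)  # occasional "reading" pause
    return max(0.05, delay)


def typing_intervals(text, rng, mean=0.12, spread=0.05):
    """Return one delay per character so typing is not perfectly even."""
    return [max(0.02, rng.gauss(mean, spread)) for _ in text]


def cursor_path(start, end, rng, steps=20, wobble=3.0):
    """Return points along a curved, slightly noisy path from start to end."""
    (x0, y0), (x1, y1) = start, end
    # A random control point bends the path (quadratic Bezier curve).
    cx = (x0 + x1) / 2 + rng.uniform(-100, 100)
    cy = (y0 + y1) / 2 + rng.uniform(-100, 100)
    points = []
    for i in range(steps + 1):
        t = i / steps
        x = (1 - t) ** 2 * x0 + 2 * (1 - t) * t * cx + t ** 2 * x1
        y = (1 - t) ** 2 * y0 + 2 * (1 - t) * t * cy + t ** 2 * y1
        if 0 < i < steps:  # keep the endpoints exact
            x += rng.uniform(-wobble, wobble)
            y += rng.uniform(-wobble, wobble)
        points.append((round(x), round(y)))
    return points


if __name__ == "__main__":
    rng = random.Random()
    print(round(human_delay(rng), 2))
    print(cursor_path((0, 0), (300, 200), rng)[:3])
```

With Selenium, these values could drive an `ActionChains` sequence, for example by calling `move_by_offset` for the difference between consecutive points and `pause` between steps, and by sleeping for `human_delay(rng)` seconds between page loads.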

Conclusions